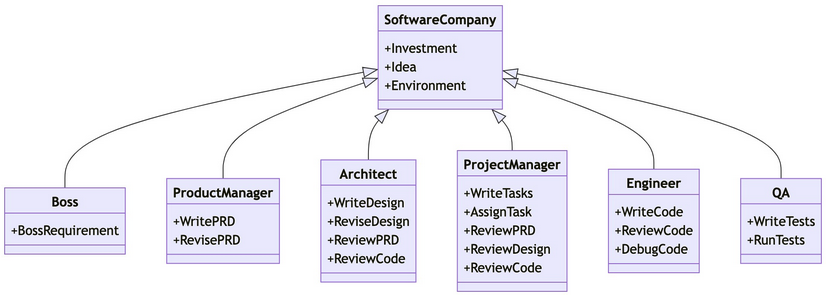

Next up on my list of projects to give a go is MetaGPT. It is described as:

- MetaGPT takes a one line requirement as input and outputs user stories / competitive analysis / requirements / data structures / APIs / documents, etc.

- Internally, MetaGPT includes product managers / architects / project managers / engineers. It provides the entire process of a software company along with carefully orchestrated SOPs.

Code = SOP(Team) is the core philosophy. We materialize SOP and apply it to teams composed of LLMs.

Setting it up is straightforward:

> git clone git@github.com:geekan/MetaGPT.git

> brew install npm

> sudo npm install -g @mermaid-js/mermaid-cli

> python3 -m venv venv

> source venv/bin/activate

> pip install -e .

> cp config/config.yaml config/key.yaml

// copy your OpenAI API key into key.yaml

> python startup.py "Build me a hello GPT website"

I should have been more specific – I just wanted a “hello world” website that printed “hello gpt” – instead it tried to make an interactive wrapper around GPT, heh. It set off for about 5 minutes generating output. A lengthy snippet is below:

2023-10-30 18:48:18.025 | INFO | metagpt.config:__init__:44 - Config loading done.

2023-10-30 18:48:21.951 | INFO | metagpt.software_company:invest:39 - Investment: $3.0.

2023-10-30 18:48:21.951 | INFO | metagpt.roles.role:_act:167 - Alice(Product Manager): ready to WritePRD

[CONTENT]

{

"Original Requirements": "Build a website that utilizes the GPT model to interact with users in a conversational manner.",

"Product Goals": ["Create an interactive user interface", "Efficiently integrate GPT model", "Ensure user-friendly experience"],

"User Stories": ["As a user, I want to interact with the GPT model directly from the website", "As a user, I want the website to have a clean and intuitive interface", "As a user, I want the website to respond quickly to my inputs", "As a user, I want to be able to get help or instructions if I'm stuck", "As a user, I want the website to be accessible from different devices"],

"Competitive Analysis": ["OpenAI's GPT-3 Playground: Provides a similar functionality but is not freely accessible", "ChatGPT: A dedicated chatbot service using GPT, but not customizable", "DeepAI Text Generator: Uses GPT-2, our product will use a more advanced model", "Hugging Face's Write With Transformer: Free service but lacks a dedicated UI", "InferKit: Offers similar services but requires account creation"],

"Competitive Quadrant Chart": "quadrantChart\n title Reach and engagement of campaigns\n x-axis Low Reach --> High Reach\n y-axis Low Engagement --> High Engagement\n quadrant-1 We should expand\n quadrant-2 Need to promote\n quadrant-3 Re-evaluate\n quadrant-4 May be improved\n OpenAI's GPT-3 Playground: [0.8, 0.9]\n ChatGPT: [0.7, 0.8]\n DeepAI Text Generator: [0.6, 0.7]\n Hugging Face's Write With Transformer: [0.5, 0.6]\n InferKit: [0.4, 0.5]\n Our Target Product: [0.5, 0.6]",

"Requirement Analysis": "The product requires a user-friendly interface for interaction with the GPT model. It should be responsive and accessible from different devices. The GPT model should be efficiently integrated to ensure quick responses.",

"Requirement Pool": [["P0","Create an interactive user interface"],["P0","Efficiently integrate GPT model"],["P1","Ensure the website is responsive"],["P1","Ensure the website is accessible from different devices"],["P2","Include a help or instructions section"]],

"UI Design draft": "The website should have a clean, minimalist design. The main element should be a large text input field where users can type their inputs. Below this, there should be a display area where the GPT model's responses are shown. There should also be a help or instructions button in a visible location.",

"Anything UNCLEAR": "The specific GPT model to be used is not specified. For this design, it is assumed that we are using GPT-3 or a similar advanced model."

}

[/CONTENT]

Warning: gpt-4 may update over time. Returning num tokens assuming gpt-4-0613.

2023-10-30 18:49:36.494 | INFO | metagpt.provider.openai_api:update_cost:89 - Total running cost: $0.065 | Max budget: $3.000 | Current cost: $0.065, prompt_tokens: 898, completion_tokens: 632

2023-10-30 18:49:36.498 | INFO | metagpt.roles.role:_act:167 - Bob(Architect): ready to WriteDesign

[CONTENT]

{

"Implementation approach": "We will use Flask, a lightweight and flexible Python web framework, for the backend. For the frontend, we will use Bootstrap to ensure a responsive and user-friendly interface. The GPT model will be integrated using the transformers library from Hugging Face, which provides pre-trained models and is easy to use. We will use AJAX for sending asynchronous requests from the frontend to the backend, ensuring a smooth user experience.",

"Python package name": "gpt_interactive_web",

"File list": ["main.py", "templates/index.html", "static/style.css", "static/script.js"],

"Data structures and interface definitions": "classDiagram\n class Main{\n +Flask app\n +str model_name\n +transformers.GPT2LMHeadModel model\n +transformers.GPT2Tokenizer tokenizer\n +__init__(model_name: str)\n +load_model()\n +generate_text(input_text: str): str\n }\n Main --|> Flask: Inherits",

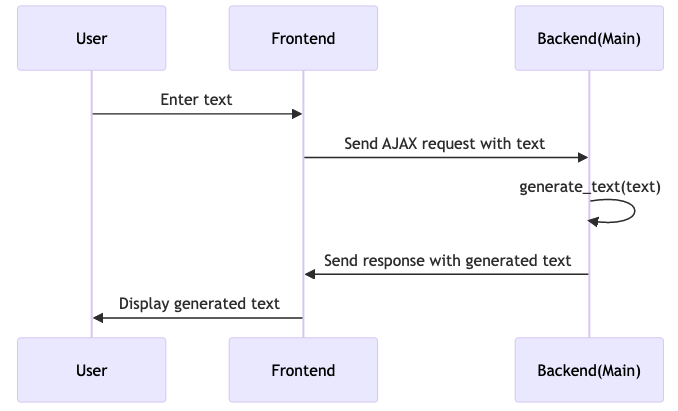

"Program call flow": "sequenceDiagram\n participant U as User\n participant F as Frontend\n participant B as Backend(Main)\n U->>F: Enter text\n F->>B: Send AJAX request with text\n B->>B: generate_text(text)\n B->>F: Send response with generated text\n F->>U: Display generated text",

"Anything UNCLEAR": "The requirement is clear to me."

}

[/CONTENT]

Warning: gpt-4 may update over time. Returning num tokens assuming gpt-4-0613.

2023-10-30 18:50:13.252 | INFO | metagpt.provider.openai_api:update_cost:89 - Total running cost: $0.119 | Max budget: $3.000 | Current cost: $0.054, prompt_tokens: 1147, completion_tokens: 323

2023-10-30 18:50:13.278 | INFO | metagpt.utils.mermaid:mermaid_to_file:43 - Generating /Users/sk/Research/MetaGPT/workspace/gpt_interactive_web/resources/competitive_analysis.pdf..

2023-10-30 18:50:43.731 | ERROR | metagpt.utils.mermaid:mermaid_to_file:69 -

TimeoutError: Timed out after 30000 ms while waiting for the WS endpoint URL to appear in stdout!

at ChromeLauncher.launch (file:///opt/homebrew/lib/node_modules/@mermaid-js/mermaid-cli/node_modules/puppeteer-core/lib/esm/puppeteer/node/ProductLauncher.js:119:23)

at async run (file:///opt/homebrew/lib/node_modules/@mermaid-js/mermaid-cli/src/index.js:404:19)

at async cli (file:///opt/homebrew/lib/node_modules/@mermaid-js/mermaid-cli/src/index.js:184:3)

2023-10-30 18:50:43.733 | INFO | metagpt.utils.mermaid:mermaid_to_file:43 - Generating /Users/sk/Research/MetaGPT/workspace/gpt_interactive_web/resources/competitive_analysis.svg..

2023-10-30 18:50:46.644 | INFO | metagpt.utils.mermaid:mermaid_to_file:67 - Generating single mermaid chart

2023-10-30 18:50:46.644 | INFO | metagpt.utils.mermaid:mermaid_to_file:43 - Generating /Users/sk/Research/MetaGPT/workspace/gpt_interactive_web/resources/competitive_analysis.png..

2023-10-30 18:50:47.753 | INFO | metagpt.utils.mermaid:mermaid_to_file:67 - Generating single mermaid chart

2023-10-30 18:50:47.754 | INFO | metagpt.actions.design_api:_save_prd:175 - Saving PRD to /Users/sk/Research/MetaGPT/workspace/gpt_interactive_web/docs/prd.md

2023-10-30 18:50:47.758 | INFO | metagpt.utils.mermaid:mermaid_to_file:43 - Generating /Users/sk/Research/MetaGPT/workspace/gpt_interactive_web/resources/data_api_design.pdf..

2023-10-30 18:50:49.004 | INFO | metagpt.utils.mermaid:mermaid_to_file:67 - Generating single mermaid chart

2023-10-30 18:50:49.005 | INFO | metagpt.utils.mermaid:mermaid_to_file:43 - Generating /Users/sk/Research/MetaGPT/workspace/gpt_interactive_web/resources/data_api_design.svg..

2023-10-30 18:50:50.077 | INFO | metagpt.utils.mermaid:mermaid_to_file:67 - Generating single mermaid chart

2023-10-30 18:50:50.077 | INFO | metagpt.utils.mermaid:mermaid_to_file:43 - Generating /Users/sk/Research/MetaGPT/workspace/gpt_interactive_web/resources/data_api_design.png..

2023-10-30 18:50:51.180 | INFO | metagpt.utils.mermaid:mermaid_to_file:67 - Generating single mermaid chart

2023-10-30 18:50:51.183 | INFO | metagpt.utils.mermaid:mermaid_to_file:43 - Generating /Users/sk/Research/MetaGPT/workspace/gpt_interactive_web/resources/seq_flow.pdf..

2023-10-30 18:50:52.269 | INFO | metagpt.utils.mermaid:mermaid_to_file:67 - Generating single mermaid chart

2023-10-30 18:50:52.269 | INFO | metagpt.utils.mermaid:mermaid_to_file:43 - Generating /Users/sk/Research/MetaGPT/workspace/gpt_interactive_web/resources/seq_flow.svg..

2023-10-30 18:50:53.343 | INFO | metagpt.utils.mermaid:mermaid_to_file:67 - Generating single mermaid chart

2023-10-30 18:50:53.343 | INFO | metagpt.utils.mermaid:mermaid_to_file:43 - Generating /Users/sk/Research/MetaGPT/workspace/gpt_interactive_web/resources/seq_flow.png..

2023-10-30 18:50:54.505 | INFO | metagpt.utils.mermaid:mermaid_to_file:67 - Generating single mermaid chart

2023-10-30 18:50:54.506 | INFO | metagpt.actions.design_api:_save_system_design:188 - Saving System Designs to /Users/sk/Research/MetaGPT/workspace/gpt_interactive_web/docs/system_design.md

2023-10-30 18:50:54.510 | INFO | metagpt.roles.role:_act:167 - Eve(Project Manager): ready to WriteTasks

[CONTENT]

{

"Required Python third-party packages": [

"flask==1.1.2",

"transformers==4.5.1",

"bootstrap==4.6.0"

],

"Required Other language third-party packages": [

"No third-party packages required for other languages."

],

"Full API spec": """

openapi: 3.0.0

info:

title: GPT Interactive Web API

version: 1.0.0

paths:

/generate:

post:

summary: Generate text based on input

requestBody:

required: true

content:

application/json:

schema:

type: object

properties:

input_text:

type: string

responses:

'200':

description: A JSON object containing the generated text

content:

application/json:

schema:

type: object

properties:

generated_text:

type: string

""",

"Logic Analysis": [

["main.py","Main class, load_model method, generate_text method"],

["templates/index.html","User interface elements"],

["static/style.css","Styling for the user interface"],

["static/script.js","AJAX request to backend"]

],

"Task list": [

"main.py",

"templates/index.html",

"static/style.css",

"static/script.js"

],

"Shared Knowledge": """

'main.py' contains the Main class which loads the GPT model and generates text based on user input.

'templates/index.html' is the main user interface where users can enter their input text.

'static/style.css' contains the styles for the user interface.

'static/script.js' handles the AJAX request from the frontend to the backend.

""",

"Anything UNCLEAR": "The requirements are clear. The main entry point will be 'main.py'. The Flask app will be initialized and the GPT model will be loaded in the Main class constructor. The generate_text method will handle the AJAX request from the frontend."

}

[/CONTENT]

Warning: gpt-4 may update over time. Returning num tokens assuming gpt-4-0613.

2023-10-30 18:51:43.570 | INFO | metagpt.provider.openai_api:update_cost:89 - Total running cost: $0.171 | Max budget: $3.000 | Current cost: $0.052, prompt_tokens: 847, completion_tokens: 444

0

Warning: gpt-4 may update over time. Returning num tokens assuming gpt-4-0613.

2023-10-30 18:51:45.530 | INFO | metagpt.provider.openai_api:update_cost:89 - Total running cost: $0.220 | Max budget: $3.000 | Current cost: $0.049, prompt_tokens: 1642, completion_tokens: 1

2023-10-30 18:51:45.531 | INFO | metagpt.actions.write_code:run:77 - Writing

Warning: gpt-4 may update over time. Returning num tokens assuming gpt-4-0613.

2023-10-30 18:52:12.177 | INFO | metagpt.provider.openai_api:update_cost:89 - Total running cost: $0.269 | Max budget: $3.000 | Current cost: $0.049, prompt_tokens: 1057, completion_tokens: 289

2023-10-30 18:52:12.195 | INFO | metagpt.actions.write_code_review:run:77 - Code review main.py..

This eventually finished – let's check out the product. Inside the workspace dir is my project. It looks like this:

.
├── docs
│   ├── api_spec_and_tasks.md
│   ├── prd.md
│   └── system_design.md
├── gpt_interactive_web
│   ├── main.py
│   ├── static
│   │   ├── script.js
│   │   └── style.css
│   └── templates
│       └── index.html
├── requirements.txt
└── resources
    ├── competitive_analysis.mmd
    ├── competitive_analysis.png
    ├── competitive_analysis.svg
    ├── data_api_design.mmd
    ├── data_api_design.pdf
    ├── data_api_design.png
    ├── data_api_design.svg
    ├── seq_flow.mmd
    ├── seq_flow.pdf
    ├── seq_flow.png
    └── seq_flow.svg

Let's create a new shell, set this project up, and see what happens:

> cd MetaGPT/workspace/gpt_interactive_web

> python3 -m venv venv

> source venv/bin/activate

> pip install -r requirements.txt

Collecting flask==1.1.2 (from -r requirements.txt (line 1))

Downloading Flask-1.1.2-py2.py3-none-any.whl (94 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 94.6/94.6 kB 1.5 MB/s eta 0:00:00

Collecting transformers==4.5.1 (from -r requirements.txt (line 2))

Downloading transformers-4.5.1-py3-none-any.whl (2.1 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 2.1/2.1 MB 7.9 MB/s eta 0:00:00

ERROR: Could not find a version that satisfies the requirement bootstrap==4.6.0 (from versions: none)

ERROR: No matching distribution found for bootstrap==4.6.0

So, pip didn’t like that. Trying to get a list of valid versions for bootstrap gives an unknown package:

> pip index versions bootstrap

WARNING: pip index is currently an experimental command. It may be removed/changed in a future release without prior warning.

ERROR: No matching distribution found for bootstrap

[notice] A new release of pip is available: 23.2.1 -> 23.3.1

[notice] To update, run: pip install --upgrade pip

OK so hah, who knows what bootstrap is supposed to be. (I upgraded pip just in case, no dice.) Let's examine what it produced.
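As an aside, the bootstrap pin is presumably the frontend CSS framework the Architect mentioned, which is not something pip can install. One way to catch that kind of entry before running pip is to check each requirement name against PyPI first. Below is a minimal sketch, not anything MetaGPT does: it assumes PyPI's JSON endpoint at `https://pypi.org/pypi/<name>/json` answers with an HTTP error for names it does not know, and the helper names (`requirement_name`, `exists_on_pypi`, `split_requirements`) are made up for illustration.

```python
import re
import urllib.error
import urllib.request


def requirement_name(line: str) -> str:
    """Return the bare project name from a line such as 'flask==1.1.2'."""
    return re.split(r"[=<>!~\[; ]", line.strip(), maxsplit=1)[0]


def exists_on_pypi(name: str) -> bool:
    """Ask PyPI's JSON endpoint whether it knows a project by this name.

    Assumption: unknown names produce an HTTP error (typically 404).
    """
    url = f"https://pypi.org/pypi/{name}/json"
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            return resp.status == 200
    except urllib.error.HTTPError:
        return False


def split_requirements(lines, exists=exists_on_pypi):
    """Split requirement lines into (installable, unknown) lists.

    `exists` is injectable so the logic can be exercised offline.
    """
    installable, unknown = [], []
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blanks and comments
        target = installable if exists(requirement_name(line)) else unknown
        target.append(line)
    return installable, unknown
```

Feeding it the lines of requirements.txt returns the pins worth passing to pip and the ones to question. It only checks that the name exists, not that the pinned version does.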

Let's start at the top – here is the PRD it generated:

## Original Requirements

Build a website that utilizes the GPT model to interact with users in a conversational manner.

## Product Goals

- Create an interactive user interface

- Efficiently integrate GPT model

- Ensure user-friendly experience

## User Stories

- As a user, I want to interact with the GPT model directly from the website

- As a user, I want the website to have a clean and intuitive interface

- As a user, I want the website to respond quickly to my inputs

- As a user, I want to be able to get help or instructions if I'm stuck

- As a user, I want the website to be accessible from different devices

## Competitive Analysis

- OpenAI's GPT-3 Playground: Provides a similar functionality but is not freely accessible

- ChatGPT: A dedicated chatbot service using GPT, but not customizable

- DeepAI Text Generator: Uses GPT-2, our product will use a more advanced model

- Hugging Face's Write With Transformer: Free service but lacks a dedicated UI

- InferKit: Offers similar services but requires account creation

## Competitive Quadrant Chart

quadrantChart

title Reach and engagement of campaigns

x-axis Low Reach --> High Reach

y-axis Low Engagement --> High Engagement

quadrant-1 We should expand

quadrant-2 Need to promote

quadrant-3 Re-evaluate

quadrant-4 May be improved

OpenAI's GPT-3 Playground: [0.8, 0.9]

ChatGPT: [0.7, 0.8]

DeepAI Text Generator: [0.6, 0.7]

Hugging Face's Write With Transformer: [0.5, 0.6]

InferKit: [0.4, 0.5]

Our Target Product: [0.5, 0.6]

## Requirement Analysis

The product requires a user-friendly interface for interaction with the GPT model. It should be responsive and accessible from different devices. The GPT model should be efficiently integrated to ensure quick responses.

## Requirement Pool

- ['P0', 'Create an interactive user interface']

- ['P0', 'Efficiently integrate GPT model']

- ['P1', 'Ensure the website is responsive']

- ['P1', 'Ensure the website is accessible from different devices']

- ['P2', 'Include a help or instructions section']

## UI Design draft

The website should have a clean, minimalist design. The main element should be a large text input field where users can type their inputs. Below this, there should be a display area where the GPT model's responses are shown. There should also be a help or instructions button in a visible location.

## Anything UNCLEAR

The specific GPT model to be used is not specified. For this design, it is assumed that we are using GPT-3 or a similar advanced model.

And the Competitive Quadrant Chart:

And the Sequence Flow Diagram:

Certainly pretty cool – let's go back and see if we can run this. Getting lazy, so let's just cheat and get it working.

> python main.py

Traceback (most recent call last):

File "/Users/sk/Research/MetaGPT/workspace/gpt_interactive_web/gpt_interactive_web/main.py", line 2, in <module>

from flask import Flask, request, jsonify

ModuleNotFoundError: No module named 'flask'

> pip install flask

> python main.py

Traceback (most recent call last):

File "/Users/sk/Research/MetaGPT/workspace/gpt_interactive_web/gpt_interactive_web/main.py", line 3, in <module>

from transformers import GPT2LMHeadModel, GPT2Tokenizer

ModuleNotFoundError: No module named 'transformers'

> pip install transformers

> python main.py

None of PyTorch, TensorFlow >= 2.0, or Flax have been found. Models won't be available and only tokenizers, configuration and file/data utilities can be used.

Downloading (…)olve/main/vocab.json: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1.04M/1.04M [00:00<00:00, 13.6MB/s]

Downloading (…)olve/main/merges.txt: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 456k/456k [00:00<00:00, 16.3MB/s]

Downloading (…)/main/tokenizer.json: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1.36M/1.36M [00:00<00:00, 15.5MB/s]

Downloading (…)lve/main/config.json: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 665/665 [00:00<00:00, 4.46MB/s]

Error loading model:

GPT2LMHeadModel requires the PyTorch library but it was not found in your environment. Checkout the instructions on the

installation page: https://pytorch.org/get-started/locally/ and follow the ones that match your environment.

Please note that you may need to restart your runtime after installation.

* Serving Flask app 'main'

* Debug mode: on

WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.

* Running on http://127.0.0.1:5000

Press CTRL+C to quit

* Restarting with stat

None of PyTorch, TensorFlow >= 2.0, or Flax have been found. Models won't be available and only tokenizers, configuration and file/data utilities can be used.

Error loading model:

GPT2LMHeadModel requires the PyTorch library but it was not found in your environment. Checkout the instructions on the

installation page: https://pytorch.org/get-started/locally/ and follow the ones that match your environment.

Please note that you may need to restart your runtime after installation.

* Debugger is active!

* Debugger PIN: 564-903-568

127.0.0.1 - - [30/Oct/2023 19:20:57] "GET / HTTP/1.1" 404 -

127.0.0.1 - - [30/Oct/2023 19:20:58] "GET /favicon.ico HTTP/1.1" 404 -

127.0.0.1 - - [30/Oct/2023 19:21:50] "GET / HTTP/1.1" 404 -

127.0.0.1 - - [30/Oct/2023 19:22:01] "GET /index.html HTTP/1.1" 404 -

127.0.0.1 - - [30/Oct/2023 19:22:15] "GET /templates/index.html HTTP/1.1" 404 -

127.0.0.1 - - [30/Oct/2023 19:22:29] "GET /generate HTTP/1.1" 405 -

127.0.0.1 - - [30/Oct/2023 19:22:44] "GET / HTTP/1.1" 404 -

It had trouble resolving a GET to the root of this app. This is the code we are running:

## main.py
from flask import Flask, request, jsonify
from transformers import GPT2LMHeadModel, GPT2Tokenizer


class Main(Flask):
    def __init__(self, model_name: str = 'gpt2', import_name: str = __name__):
        super().__init__(import_name)
        self.model_name = model_name
        self.model = None
        self.tokenizer = None
        self.load_model()

        @self.route('/generate', methods=['POST'])
        def generate():
            try:
                data = request.get_json()
                input_text = data.get('input_text', '')
                generated_text = self.generate_text(input_text)
                return jsonify({'generated_text': generated_text})
            except Exception as e:
                return jsonify({'error': str(e)})

    def load_model(self):
        try:
            self.tokenizer = GPT2Tokenizer.from_pretrained(self.model_name)
            self.model = GPT2LMHeadModel.from_pretrained(self.model_name)
        except Exception as e:
            print(f"Error loading model: {e}")

    def generate_text(self, input_text: str) -> str:
        try:
            inputs = self.tokenizer.encode(input_text, return_tensors='pt')
            outputs = self.model.generate(inputs, max_length=150, num_return_sequences=1)
            generated_text = self.tokenizer.decode(outputs[0], skip_special_tokens=True)
            return generated_text
        except Exception as e:
            print(f"Error generating text: {e}")


if __name__ == "__main__":
    app = Main('gpt2')
    app.run(debug=True)

Looking at the code, there doesn’t seem to be a default route for / or any GET support. There is a route for /generate, so let's try hitting that with curl.
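As a side note, the 404s on / and the 405 on GET /generate in the log are what Flask gives you when no root route is registered and /generate only accepts POST. A minimal sketch of what a root route could look like follows. This is my own illustration, not what MetaGPT generated: `INDEX_HTML` is a stand-in for templates/index.html (a real app would more likely call `render_template("index.html")`), and the /generate handler just echoes its input instead of calling a model.

```python
from flask import Flask, jsonify, render_template_string, request

app = Flask(__name__)

# Stand-in for templates/index.html so the sketch is self-contained.
INDEX_HTML = "<h1>hello gpt</h1>"


@app.route("/")  # registering this makes GET / return 200 instead of 404
def index():
    return render_template_string(INDEX_HTML)


@app.route("/generate", methods=["POST"])  # a GET here still yields 405
def generate():
    data = request.get_json(silent=True) or {}
    # Echo placeholder; the generated app would call its model here.
    return jsonify({"generated_text": data.get("input_text", "")})
```

With a route like that in place, a browser hitting http://127.0.0.1:5000/ would get a page back, while /generate would still need a POST.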

terminal 1:

curl -X POST http://127.0.0.1:5000/generate -H "Content-Type:application/json" -d '{"input_text": "tell me a joke"}'

{

"generated_text": null

}

terminal 2:

Error generating text: Unable to convert output to PyTorch tensors format, PyTorch is not installed.

127.0.0.1 - - [30/Oct/2023 19:26:06] "POST /generate HTTP/1.1" 200 -

OK, let's install PyTorch.

> pip install torch

> python main.py

curl -X POST http://127.0.0.1:5000/generate -H "Content-Type:application/json" -d '{"input_text": "tell me a joke"}'

{

"generated_text": "tell me a joke, but I'm not going to tell you what I'm going to do. I'm going to tell you what I'm going to do. I'm going to tell you what I'm going to do. I'm going to tell you what I'm going to do. I'm going to tell you what I'm going to do. I'm going to tell you what I'm going to do. I'm going to tell you what I'm going to do. I'm going to tell you what I'm going to do. I'm going to tell you what I'm going to do. I'm going to tell you what I'm going to do. I'm going to tell you what I'm going to do."

}

Haha, OK, so it’s late and this thing’s been drinking. Until next time, sk out!